As the 2024 election approaches, artificial intelligence (AI) is playing an increasingly significant role in shaping public opinion. AI-generated graphics, such as deepfakes and synthetic media, have the potential to drastically influence elections by creating hyper-realistic images and videos that are difficult to distinguish from reality. These visuals can manipulate voter sentiment, create false narratives, and spread misinformation at an unprecedented scale, ultimately undermining the democratic process.

In this blog post, I’ll explore how AI-generated graphics work, their potential impact on elections, and how you can spot these manipulative visuals to protect yourself and others from being misled.

What Are AI-Generated Graphics?

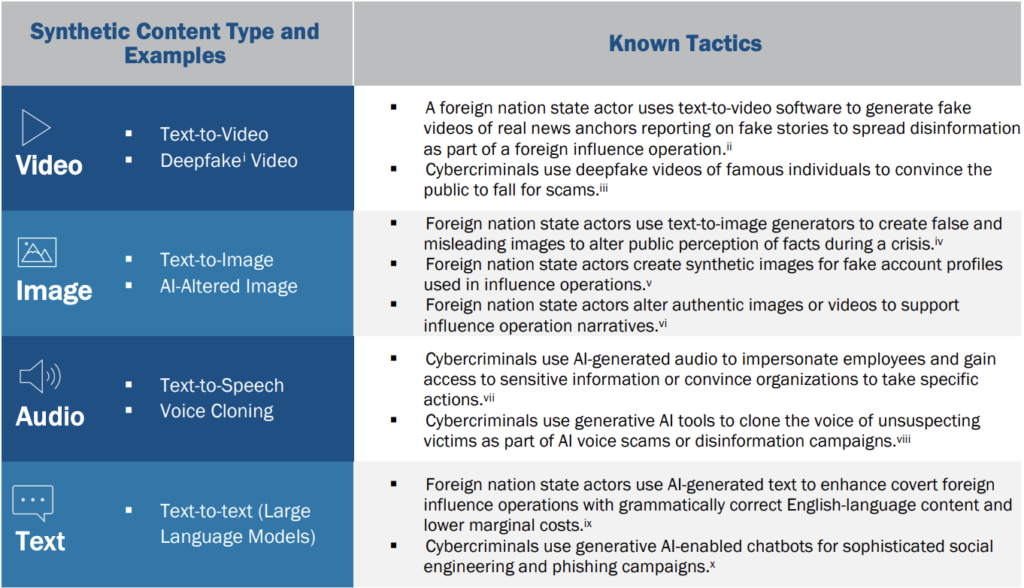

AI-generated graphics are images and videos, sometimes paired with synthetic audio, that are created or altered by artificial intelligence algorithms, particularly through deep learning techniques. One of the most advanced forms of AI-generated content is the deepfake, which can make people appear to say or do things they never did, often with a convincing level of realism. In elections, these visuals can be weaponized to spread false information about candidates or political events.

For example, models such as Generative Adversarial Networks (GANs) pit two neural networks against each other: a generator that produces images and a discriminator that tries to tell them apart from real ones. Trained this way, the generator learns to produce hyper-realistic images that can be used to fabricate scenarios, such as showing a candidate participating in illegal activities. AI’s ability to alter reality poses significant risks to democracy by eroding public trust in authentic information (UNRIC, 2024).

The Impact of AI-Generated Graphics on Elections

AI-generated graphics, particularly in the form of deepfakes, can have wide-ranging consequences during election cycles. They have the potential to sway public opinion by creating false narratives that go viral before being debunked. Studies have shown that false news can spread roughly six times faster than factual information on social media (Scientific American, 2024).

These fabricated images can also impact voter turnout and discredit candidates by creating confusion around their statements and actions. For instance, deepfake videos that misrepresent a candidate’s stance on a key issue can lead to mistrust among voters, even if the video is later proven false (Harvard Kennedy School, 2024). With AI making it easier to generate these manipulations at scale, malicious actors, both domestic and foreign, are leveraging these technologies to target vulnerable voter populations and influence election outcomes (NCSL, 2024).

In this CNN segment, Jake Tapper uses a deepfake of himself to show just how convincing and dangerous AI-generated content can be. The video demonstrates how easy it is to manipulate visuals, making it seem like someone is saying or doing things they never actually did. It’s a clear reminder of how deepfakes can be used to spread misinformation, especially during elections when public opinion is easily influenced. This example underscores the importance of staying alert and informed so we can spot these fakes before they do real damage.

How to Spot AI-Generated Graphics

While AI-generated graphics are becoming more sophisticated, there are several ways to detect and avoid falling victim to them:

1. Look for Unnatural Movements or Facial Features

Deepfakes often struggle to replicate natural human expressions and movements perfectly. When watching a video, pay attention to how the face interacts with light and shadows, as well as subtle movements like blinking or lip-syncing. If something seems off, it may be an AI-generated fake. For example, slight delays in lip movement or inconsistent skin textures across a person’s face can indicate AI tampering (CISA, 2024).

2. Reverse Image Search

If you come across an image that seems suspicious, a reverse image search tool like Google Images or TinEye can help you assess its authenticity. These tools show where else the image has been published online, so you can compare it with earlier versions and see whether it has been altered or taken out of context (NCSL, 2024).
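To give a feel for how software can recognize the "same" picture after small edits, here is a minimal sketch of a difference hash, one well-known perceptual hashing technique. This is an illustration only: I am not claiming it is how Google or TinEye work internally, and the helper names (`shrink`, `dhash`, `hamming`) and the tiny test images are my own assumptions.

```python
from typing import List

# Assumption: an image is a grid of grayscale brightness values (0-255).
Grid = List[List[int]]


def shrink(pixels: Grid, width: int, height: int) -> Grid:
    """Downsample to width x height by nearest-neighbour sampling."""
    src_h, src_w = len(pixels), len(pixels[0])
    return [
        [pixels[r * src_h // height][c * src_w // width] for c in range(width)]
        for r in range(height)
    ]


def dhash(pixels: Grid, size: int = 8) -> int:
    """Difference hash: one bit per comparison of horizontally adjacent pixels."""
    small = shrink(pixels, size + 1, size)
    bits = 0
    for row in small:
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | (1 if left > right else 0)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; a small distance suggests near-duplicate images."""
    return bin(a ^ b).count("1")


# Hypothetical test images: a left-to-right gradient, a brighter copy, and a mirror image.
original = [[c * 6 for c in range(32)] for _ in range(32)]
brightened = [[v + 20 for v in row] for row in original]
mirrored = [row[::-1] for row in original]

print(hamming(dhash(original), dhash(brightened)))  # 0: same picture, just brighter
print(hamming(dhash(original), dhash(mirrored)))    # 64: every bit differs
```

Because the hash only records whether each pixel is brighter than its neighbor, a uniform brightness change leaves it untouched, while a genuinely different layout flips many bits. Real images would first need to be decoded and converted to grayscale, which this sketch leaves out.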

3. Verify the Source

Always check where the image or video originated. Is it coming from a reputable news source, or was it shared via a social media post from an unverified account? Images shared by unverified accounts are more likely to be AI-generated or manipulated. Additionally, trusted news organizations typically vet the visuals they use, reducing the likelihood of publishing false or AI-generated content (UNRIC, 2024).

4. AI Detection Tools

Several AI-detection tools have emerged in recent years to help users identify synthetic media. For instance, Adobe has developed content authenticity tools, and companies like Reality Defender have built deepfake detection algorithms that flag suspicious content (CISA, 2024). Using these tools when you’re uncertain can be an extra layer of defense against misinformation.

The Role of Social Media in Spreading AI-Generated Graphics

Social media platforms like Facebook, Twitter (now X), and Instagram have become major hubs for the rapid dissemination of AI-generated content. These platforms use algorithms that prioritize engagement, meaning highly shareable and often sensational content is more likely to go viral, regardless of its accuracy.
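A toy example makes the point concrete. The sketch below ranks a feed purely by engagement; the `Post` fields, the weights, and the sample posts are all hypothetical and do not describe any real platform's algorithm. Note that the ranking function never reads the `is_accurate` field.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Post:
    title: str
    likes: int
    shares: int
    comments: int
    is_accurate: bool  # known to us as readers, but ignored by the ranking


def engagement_score(post: Post) -> float:
    # Hypothetical weights: shares count most because they reach new audiences.
    return post.likes * 1.0 + post.comments * 2.0 + post.shares * 3.0


def rank_feed(posts: List[Post]) -> List[Post]:
    """Order posts from most to least engaging, regardless of accuracy."""
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Fact-checked policy explainer", likes=120, shares=10, comments=15, is_accurate=True),
    Post("Shocking fabricated candidate video", likes=300, shares=250, comments=180, is_accurate=False),
    Post("Local polling place hours", likes=40, shares=5, comments=2, is_accurate=True),
]

feed = rank_feed(posts)
for post in feed:
    print(f"{engagement_score(post):7.1f}  {post.title}")
```

In this made-up feed the fabricated video lands at the top simply because it draws the most reactions, which is the dynamic described above.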

According to a 2024 study by the Center for Democracy & Technology, 60% of voters under 35 are likely to encounter AI-generated visuals at least once during an election cycle. Platforms have started to implement content moderation tools to combat disinformation, but the sheer volume of AI-generated content makes complete detection almost impossible.

The Risks of AI-Generated Memes in the 2024 Election

In November 2023, Vice President Kamala Harris led the U.S. delegation to a pivotal global AI safety summit in the U.K., where she underscored the dangers of AI, including the spread of disinformation through deepfakes. Harris framed these risks not only as technical threats but as existential challenges to democracy, noting how AI tools like deepfakes and voice cloning could be used to manipulate public perception during elections. At the summit, Harris announced the creation of the U.S. AI Safety Institute to address these concerns and develop safeguards around AI technology (Scientific American).

As part of the Democratic platform, Harris and her team have pointed out the dual nature of AI: its ability to bring benefits, such as improved weather prediction, and its potential for harm, such as the creation of disinformation and fraudulent content. These AI-generated memes or deepfakes have become a significant threat in election cycles, as they are often used to sway public opinion before the content is debunked (Scientific American).

A major contrast in the 2024 election is how the candidates approach the use of AI. Harris’s campaign has committed to not using AI-generated content, whereas former President Donald Trump has actively shared AI-generated visuals on platforms like X (formerly Twitter) and Truth Social. One notable instance involved a deepfake falsely claiming an endorsement from Taylor Swift. Swift, who has been targeted by AI deepfakes in the past, publicly condemned this tactic, stating it heightened her fears about AI’s role in spreading misinformation (Scientific American).

These concerns are not limited to celebrities. A recent report from the Center for Democracy & Technology revealed that around 15% of U.S. high school students have encountered sexually explicit deepfakes of their peers, highlighting the pervasive nature of this technology. As AI continues to evolve, voters must critically evaluate how these tools are being used to shape political narratives and influence public opinion.

As we head into the 2024 elections, it’s clear that AI-generated memes and disinformation are issues that voters cannot ignore.

Why Reliable Information Matters During Elections

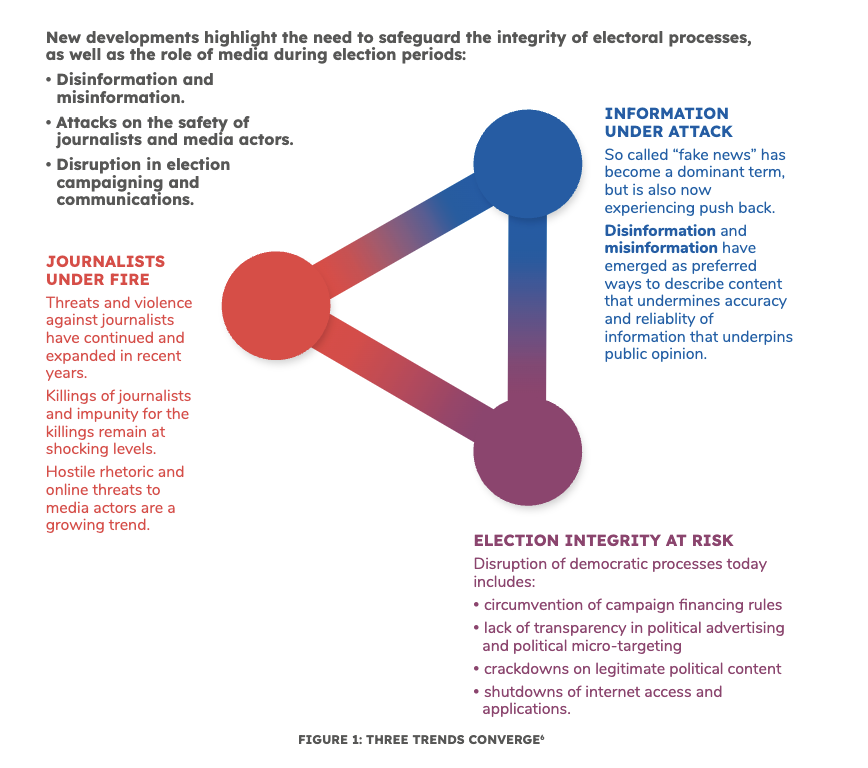

During election season, the information we encounter shapes how we think, vote, and engage in democracy. But with the rise of digital technologies and social media, we’re seeing an increase in misleading content, like deepfakes and misinformation, which can make it difficult to know what’s true. This is why it’s so important to base public discussions on accurate and reliable information, ensuring voters can make informed decisions at the ballot box.

Unfortunately, journalists, who are often the first line of defense against misinformation, face growing challenges. In recent years, they’ve been targeted by hostile rhetoric, threats, and even violence. At the same time, the term “fake news” has been used to undermine the media’s credibility, making it harder for people to trust the information they see. This trend not only impacts journalists but also threatens the flow of accurate information during critical moments like elections.

New technologies, like AI, add another layer of complexity. While these tools can make the election process more efficient, they can also be used to create false content that spreads quickly online. Deepfakes, for example, are videos or images that appear real but have been digitally altered to deceive viewers. With AI, it’s becoming easier to manipulate content, making it crucial for voters to understand how to identify and combat misinformation.

Luckily, organizations like the United Nations are stepping in to help. Through support programs and training, they’re working with election officials, journalists, and governments to ensure they have the tools to protect democratic institutions in this digital age (UNESCO).

As voters, staying informed and critical of what we see online is more important than ever. By relying on trusted sources and being aware of the tactics used to spread false information, we can protect the integrity of elections and ensure our voices are heard accurately.
