As social media plays a growing role in how we consume information, the spread of misinformation has become a significant concern, and platforms have had to adopt strategies to address it. In this post, I look at how two platforms, TikTok and X (formerly Twitter), are tackling misinformation, the strengths and weaknesses of their efforts, and what improvements could be made.

A Pew Research Center study found that a growing share of Americans regularly get news on TikTok, underscoring the platform’s role in shaping public perception. Recognizing this responsibility, TikTok has implemented several measures to combat misinformation.

Fact-Checking and Content Moderation

TikTok partners with third-party fact-checkers to identify and flag false content quickly. According to its Misinformation Transparency Report, TikTok combines AI-driven moderation with human oversight. Flagged content is meant to pass through multiple levels of verification, reducing the chance that harmful content goes undetected. When content is determined to be misleading, TikTok may either remove it or add a warning label that redirects users to verified sources for accurate information.

TikTok has also made strides in educating its users about misinformation. The platform shows educational banners and hubs when users search for terms or topics commonly associated with misinformation. These banners guide users to trusted sources like the World Health Organization (WHO) or national health agencies. TikTok also launched a Harmful Misinformation Guide to help users recognize and report misleading information.

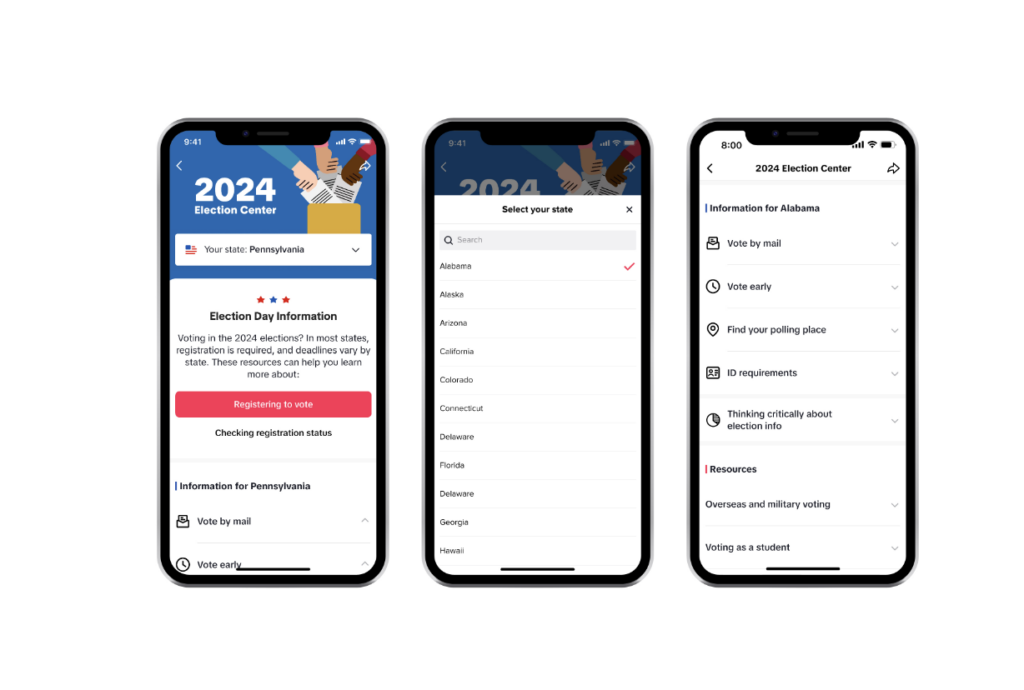

Election Integrity

As we approach major election cycles in many countries, TikTok has ramped up efforts to protect election integrity. In a recent update, TikTok says it is limiting the spread of false information about elections by partnering with election monitoring organizations. It has set up dedicated teams to monitor for harmful content in real time, while educational banners continue to guide users toward accurate, official sources of election information. This initiative matters given the platform’s reach and its influence on younger demographics, many of whom may be first-time voters.

From my own observations and research, TikTok’s policies seem to work to an extent, especially when it comes to labeling and flagging specific types of content. For instance, I’ve noticed some content labeled as AI-generated, which helps users differentiate between authentic content and digitally created material. However, misinformation still gets through more often than it should. Despite TikTok’s efforts to identify and flag content, I have rarely seen misinformation-related content carrying a warning banner. This gap may be due to the limitations of TikTok’s current systems, which rely heavily on AI and automated tools.

I’ve also come across cases where creators have had their content flagged incorrectly, resulting in videos being removed from the “For You” page or becoming harder to find in search results. One TikTok creator claimed that a video of hers was flagged for misinformation, which limited its visibility on the platform. While this shows that TikTok is flagging some content, it raises questions about the platform’s consistency and whether its approach is robust enough to keep up with its growth and evolving user base.

X: A Shifting Approach to Misinformation

Since Elon Musk took over and rebranded Twitter as X, the platform’s approach to handling misinformation has shifted significantly. Once known for its active role in flagging and removing false content, X has rolled back several key efforts, leaving many wondering how the platform is now equipped to combat the spread of misinformation.

Community Notes

One of X’s tools for tackling misinformation is Community Notes (previously known as Birdwatch). This feature lets users add context or corrections to misleading or incomplete posts. It’s a crowdsourced fact-checking system that aims to keep the platform more accurate by letting the community provide clarifications directly on posts. In theory, it sounds great because it gives users the power to correct misinformation themselves.

However, this system has some potential problems. Because it is community-driven, the accuracy of the notes depends on who is contributing, which can lead to inconsistencies, especially when the topic is politically sensitive or polarizing. And if not enough users participate, misinformation can still spread unchecked. While Community Notes is a great concept, it might not be enough to handle the massive volume of misinformation on X, especially now that other moderation tools have been reduced.

Before Musk’s takeover, Twitter had implemented several policies aimed at combating misinformation, particularly around sensitive topics like COVID-19 and elections. In 2021, Twitter launched a feature allowing users to flag misleading content, specifically targeting misinformation related to COVID-19 and the U.S. elections. According to Variety, this feature gave users a direct role in identifying and reporting misinformation, which helped the platform address harmful content more quickly. This added layer of moderation created a sense of shared responsibility, where users felt empowered to help reduce misinformation.

Under Musk, one of the biggest concerns is the removal of the feature that allowed users to directly report misinformation. According to a CNN report, this tool was key in helping users flag harmful or false content. Without this reporting option, X relies even more heavily on user-driven tools like Community Notes, which may not catch everything. This is worrying as we near the 2024 U.S. presidential election. Another CNN article highlighted concerns over how X will handle election-related content. Now that X has announced it will allow political ads for the 2024 U.S. elections, there are concerns that without proper moderation, misinformation could spread quickly during such a critical time.

The Role of Verified Accounts in Spreading Misinformation

One of the more controversial moves under Musk’s leadership has been the change in how verification works. Previously, the blue checkmark confirmed that an account was authentic and belonged to a notable person or organization. Now, with the introduction of paid verification, anyone who pays for a subscription can get a checkmark, regardless of credibility. This has made it easier for misinformation to spread, especially from verified accounts that users might assume are trustworthy.

For example, during the recent Israel-Gaza war, ProPublica reported that verified accounts were spreading false information about the conflict. Verified accounts often reach a wider audience, which amplifies the misinformation they share. With fewer checks in place, it’s becoming harder to trust what’s being shared on X, even from accounts that appear to be credible.

X’s changes haven’t gone unnoticed by regulatory bodies either. The European Union has raised concerns about the platform’s ability to control disinformation, especially in light of the Digital Services Act, which holds platforms accountable for moderating harmful content. The EU has flagged X for not meeting its disinformation standards, putting the platform under pressure to prove it can handle the spread of false information.

X’s approach to combating misinformation is more controversial than TikTok’s. Although Community Notes offers a crowdsourced method of fact-checking, it lacks the oversight that third-party fact-checkers provide. Additionally, the reduction in content labeling and the rollback of moderation policies have made it more difficult for users to identify and avoid misinformation.

From my experience using X, I’ve observed that misinformation, especially during major political events or health crises, circulates more freely than it did in the past. The absence of clear labels or warnings makes it harder for users to quickly gauge the reliability of the information they encounter online.

Final Thoughts

Both platforms could improve by making their efforts more transparent and focused on users. For example, TikTok could improve its AI moderation tools to reduce cases where content is wrongly flagged. X, on the other hand, could reinstate its labeling system to help users identify potentially misleading content more easily. Community Notes should also be paired with reputable fact-checking efforts to ensure accuracy, and reintroducing stricter moderation for accounts that repeatedly spread false information could further help curb misinformation on the platform.

Overall, TikTok and X have taken steps in the fight against misinformation, but their approaches are vastly different. TikTok relies on a mix of AI moderation, partnerships with fact-checkers, and educational tools, while X has pivoted toward a more community-driven model. Each platform has strengths and weaknesses, and there is still room for improvement to create healthier online communities.
